Here's the fun part.

Outlining

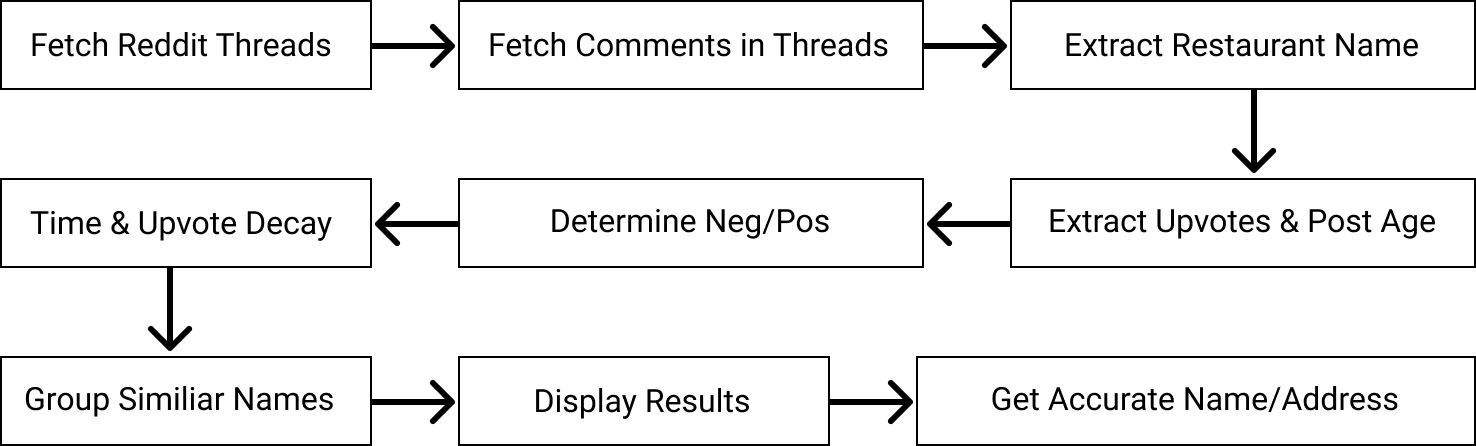

There are four components to pipeline necessary to make this work:

- Locating and Parsing Reddit Data

- Extracting Restaurant Names and Sentiment

- Computing a Popularity Score

- Clustering Restaurant Results

Locating & Parsing Data

The first order of business is locating relevant threads to extract comments from. For this, we can pull results from Google, with the domain limited to reddit.com. While Reddit has a native search functionality, it's not nearly as advanced when it comes to turning up relevant threads. For example: Reddit vs Google

We'll take the top 6 links, which typically provides sufficient data.

request = requests.get(f"https://www.googleapis.com/customsearch/v1?key={API_KEY}&cx={SEARCH_ENGINE_ID}&q={query}").json()

for i, search_item in enumerate(request['items'][:6], start=1):

# extract comments

(Pseudo-code)

From here, we'll want to get a list of comments for each thread. Reddit provides their data as JSON via API, so this is simple to do. We'll also want to run some pre-processing to ensure any unwanted text is stripped out, and newlines are replaced with periods to help with latter processing.

class RedditParser():

def __init__(self, url):

self.url = url + ( "reddit.json" if url[-1] == "/" else "/reddit.json" )

def __parse_c(self, string):

"""

Strip out newlines, replace them with a period, and strip out and repeating spaces.

Remove markdown links, and run preprocessing \

"""

string = re.sub((?:\n|\r|\\n|\\r)+", ". ", re.sub(r"(\.|\?|!|:) *(?:\n|\r|\\n|\\r)+", r"\1 ", string))

string = re.sub(r'\[([^\]]+)\]\([^)]+\)', r"\1", string)

return string.replace('&', '&').replace('\\', "").replace("\t", "").replace('’', "'")

def __rec_pull_replies(self, comment, comments):

for i in comment['replies']['data']['children']:

comments.append((self.__parse_c(i['data']['body']))

self.__rec_pull_replies(i['data'], comments)

Once this is all done, we'll have a list of comments that look like this:

[

{

"body": "X sucks, I went there last week",

"upvotes": 4,

"date": "1557724800",

},

...

]

Restaurant Names And Sentiment

For each restauraunt, it's important to determine if a comment is recommending it, or recommending against it.

To do so, we'll need to find the sentiment of the comment towards the entity. Both named-entity-recognition and sentiment analysis are very popular tasks - however, entity-sentiment analysis is significantly less popular and common libraries don't provide such functionality. (Google's NLP API provides entity sentiment detection, but is cost-prohibitive).

Past studies have used models trained against certain entites to detect sentiment (Meng et al). Other approaches combining entity detection and sentiment analysis using a variety of techniques have also been proposed before, acheiving ~60-80% (Saif et al, Engonopoulos et al). Such approaches however, are not easily accessible as they require either a pre-targeted list of entities or significant training / corpus data.

More primitive methods involve breaking up NER (entity recognition) and sentiment classification into two separate tasks (Batra et al). For our purposes, this will suffice.

To do so, we can extract all the entities from a comment, filter out irrelevant ones (ie, locations), and tag them with the sentiment of the comment. However, since comments can contain both a pro-recommendation and an against recommendation, we'll take the sentence-level sentiment when tagging an entity. This reduces scenerios where entities are improperly tagged. Fortunately for us, restaurant recommendations typically follow similiar formats, making this approach relatively robust, though the trade-off is that we potentially miss against (negative) recommendations that elaborate in further sentences.

Because typically people ask for recommendations (most people don't post asking about restaurants to avoid), we'll assume any "neutral" mention is a positive vote too.

> Don't go to Mulberry. The food sucks. Pizzana much is better.

Mulberry: Sentence Level - Negative, Comment Level - Negative

Pizzana: Sentence Level - Positive, Comment Level - Negative

> I love you for this - thank you! My friend said that the lobster mash at Mastro’s is a bad lol.

Mastro’s: Sentence Level - Positive, Comment Level - Negative

> Well, remember it's also about what YOU like. I think Ruth Chris' steaks SUCK. If I wanted to eat charcoal, I'd eat charcoal. Me? I like Taylor's Steak House. Nice place and great steaks.

Ruth Chris: Sentence Level - Negative, Comment Level - Negative

Taylor's Steak House: Sentence Level - Positive, Comment Level - Negative

> I was at Mastro's last night actually. There's no way that's the best in the city. I mean, it's far from terrible but also far from amazing. See if you can get someone to get you in at magic castle.

(Incorrect) Mastro’s: Sentence Level - Positive, Comment Level - Negative

For sentiment recognition, a relatively simple task, we'll use a RoBERTa based model trained on Twitter data, which provides ~95% accuracy.

Named Entity Recognition in itself is typically also a simple task. However, we want to ensure any entities we extract are restaurants, not people, locations, or other entities.

One approach to this is to check against a comprehensive database of restaurants, such as Google Maps. However, doing so is expensive, and also extremely slow (it's normal for 500 comments to have 750+ entities to check). Instead, with some model ensembling and filtering, we can devise a system to accurately extract restaurants, and restaurants only.

In our case, we used Spacy's RoBERTA Transformer NLP model paired with Flair's Large NER model to cross-check any extracted entities. The entities we are looking for are: 'PERSON', 'FAC', 'ORG', 'WORK_OF_ART', 'PRODUCT.' To solve any disagreements between the two models, we can use Spacy's dependency parser look at syntax and pull certain subjects (ie, nsubj, dobj) in a sentence to cross-reference with any entities picked up by either model. If 2/3 methods agree, an entity is added to the final list.

Naturally, this method does create false positives and negatives. Therefore, we made the assumption that if a restaurant is truly relevant, it'll appear enough times for the model to pick it up, and if something that isn't a restaurant is picked up, it won't appear enough times to throw off the results overall. Because of this margin of error, restaurants below a certain score threshold may not actually be restaurants, or might not have an accurate representation of popularity.

for z in range(len(spacy_sents)):

locations = self.__verify_entity_position(self.__extract_nsubj_dobj_spacy(spacy_sents[z]), self.__extract_notable_entities_spacy(spacy_sents[z].ents), self.__extract_notable_entities_flair(flair_sents[i].get_spans('ner')))

additonal_locations = self.__verify_entity_position(self.__extract_nsubj_dobj_spacy(spacy_sents[z]), self.__extract_notable_entities_flair(flair_sents[i].get_spans('ner')), [])

locations += additonal_locations

We end up with a list like this (after we cluster names. More on that later):

[

{

"Mastro": -1,

"Pizza": 1,

},

{

"Mozzo": 1,

},

...

]

Computing a Popularity Score

Now that we have the essential data needed, we can proceed with computing a popularity score for each restaurant based on the # of upvotes, the age of the comment, and sentiment towards a restaurant.

We'll want to add a time decay to the final score. A 10-year old comment won't be as relevant as a 5 month old comment, as food quality changes. However, this decay should not be linear. Instead, we'll use a exponential decay curve.

min(1, 1 / (0.005*(years_ago-66)) + 4.08)

We'll also want to flatten the upvote score, similiar to Reddit's karma system, to prevent one single, highly upvoted comment from masking everything else. Doing so also reduces the risk that a mis-extracted/clustered restaurant has a big impact on the rest of the listings. Here, we use an logarithmic function.

3 * math.log(abs(raw) + 1, 10) * (1 if raw > 0 else -1)

Since we're taking the absolute value when applying the log, we'll restore the original sign when returning the function. Finally, we just multiply everything together.

time_decay * upvote_score * restaurant_sentiment

Clustering Restaurant Results

Possibly one of the most complex challenges is ensuring that restaurants with similiar names aren't counted twice, or separately. IE, "Subway," "Sub way," and "Subway Sandwich" refer to the same restaurant.

There are several ways to handle this. For one, we can apply some text-similarity algorithms (Hamming, Jaro, Levenshtein distance) or even cosine similiarity with GloVE vectors. One issue with Hamming/Jaro/Levenshtein distance (which looks at the number of operations (edits) needed to make string_a into string_b) is that the phrase "Alexander's Steakhouse" and "Alexander's" would have a high distance (11), meaning the threshold would need to be set abnormally high to catch all forms of restaurant names.

If we want to get even more complex, K-Means Clustering, or better, Affinity Propagation, could be suitable for this task if pair with some distance algorithm.

I found that FuzzyWuzzy's partial_ratio() function (which implements Ratcliff-Obershelp via SequenceMatcher) with minor modifications is generally sufficient. We'll look at the compare longest result in each "cluster" of restaurants with the current one to decide if a name should be added to a cluster, after applying some minor transformations - lower-casing and inversion.

fuzz.partial_ratio(g_longest.lower(), k.lower()) > 80 or fuzz.partial_ratio(g_longest.lower(), ' '.join(k_rev).lower()) > 90

This clustering needs to be applied in two places - once, when parsing a comment, to ensure a restaurant is not counted twice in a comment. Another time, when aggregating the scores across multiple comments. In the former case, we simply drop the extra names, while in the latter case, we'll add up the scores.

We end with something like this:

{

"Mastro": ["Mastro","Mastro's","Mastros"],

"Subway": ["Subway sandwich","subway","the subway"],

"Bread & Butter": ["Bread and butter","bread butter","Bread an Butter"],

...

}

Presenting Data

Finally, it's just a matter of pairing the aggregated restaurant names with their respective scores, a trivial task. We've now tied together a complete pipeline that processes each post and returns a list of recommendations, which we can serve via an REST API.

From the client side, it's a simple call to another external API to retrieve restaurant names from an actual database and populate the listing with business status, and address.

fetch(`https://api.betterfoodrecs.com/api/v1/invoke/?name=${item[0]}&location=${location_c}&food=${food_c}`).then(

(response) => response.json()

).then(

(data) => {

if (data['results'][0]['name'].length > 1){

populate_data(data);

}

}

)

And...there we have it. Suggestions? Questions? Email welton@betterfoodrecs.com

Built by Welton Wang © 2022